We combine these with our skill and experience to create a service designed to deliver real value to our clients. Internally, we also use conceptual frameworks, in-house scripts and checklists to guide the process. For example, we use the MITRE ATT&CK framework as a guide.

During our discussions, and most importantly when working together, we first agree on “rules of engagement”, which are essential for managing safety and driving a tangible outcome. Because a Red Team engagement is a simulation, we will not use real malware, physical techniques, or other potentially destructive methods or tools.

In establishing a goal-oriented approach, we work together to define a series of scenarios, agreeing up front which ones will be executed and what each is meant to accomplish. Key “trophies” include specific files (simulating access to a trade secret), access to non-public IP or critical data stores, and Domain Admin or root privileges. Together, we then establish:

- Options: What types of simulation will be used (for example, social engineering, phishing or vishing)?

- Transparency: How much assistance or direction will Chaleit be given?

- Vectors: Through which routes could our clients' defences be breached?

In more detail, the process then turns to the following questions for this Red Team engagement:

- Do we know “what we are after” (the end-goal)?

- Where do we start to pave the path towards achieving the goal?

- What routes can we research, plan and construct to achieve the goal?

- How do we execute the plan without being detected?

- What are the desired outcomes? Note that the goal may be achieved, partially achieved or not achieved.

Note again that Pen Testing is more like an audit: it looks at “how many ways” we might gain access. In a Red Team, by contrast, we are more interested in what we can gain access to by the most direct route.

For clarity, we can now distinguish the core concepts in more detail and introduce another often misunderstood term:

- Pen Testing – An audit-focussed approach that creates “noise” in order to identify all possible security vulnerabilities and misconfigurations.

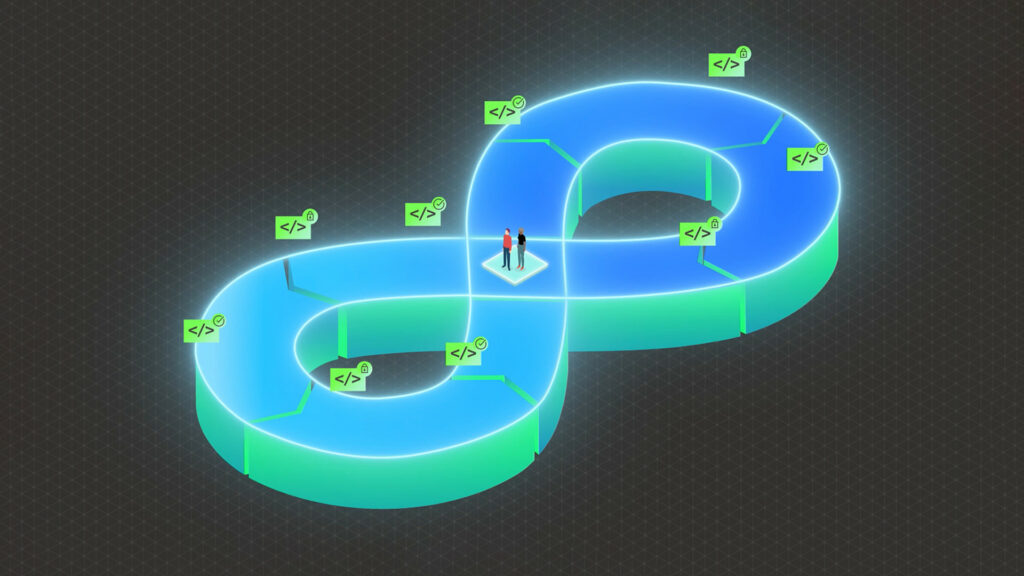

- Red Teaming – A goal-oriented approach that can create great value. In particular, it:

  - Can simulate a stealthier adversary

  - Can draw on a variety of methods and potential vectors, depending on the specific goal (including testing, social engineering, spear phishing, or anything else that yields value or a foothold)

- Purple Teaming – An approach that assesses response and controls. Questions to ask in this scenario include:

  - Were we detected?

  - How did we respond?

  - How did you respond?

  - How long did it take?

  - How adequate are your responses and controls?

    - Were we stopped (unable to reach the goal)?

    - Are we blocked (unable to come back)?